I work with many enterprise-level organizations in the Midwestern United States. There is a lot of excitement around Power BI; however, I often run into challenges regarding features that are expected of a business intelligence solution that can meet the needs of an enterprise, such as governance, administration, and lifecycle management.

In Business Intelligence, there is a pendulum that swings between self-service and governance, and it wreaks havoc on most organizations of reputable size. From an end-user feature standpoint, I can confidently say that Power BI competes well with any solution on the market. With a FREE offering and a PRO offering at only $9.99 a month per user, the price point is hard to beat. But what about total cost of ownership (TCO)? What about enterprise deployments of Power BI? I intend to use this blog to help answer these questions regarding Power BI, SSAS Tabular models, and an overall agile approach to business intelligence within the enterprise.

Let’s start with discussing lifecycle management and taking reports through development, testing, and production similar to how you would deploy a codebase for an application.

First of all, we need to ensure that we are working with the PRO version of Power BI. Yes, if you want enterprise-level features, you are going to have to pay for them. Two PRO features are used below: Power BI Group Workspaces and Content Packs.

Group Workspaces in Power BI allow multiple users to “co-author” reports and share ownership of the Power BI content. You can easily create a group workspace from the left-hand navigation bar.

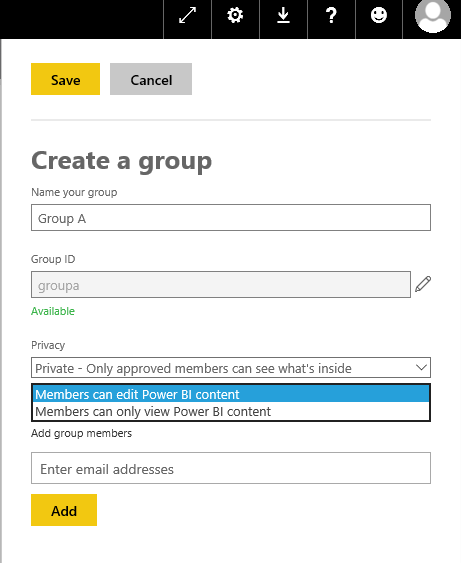

Once a group has been created, you can assign users either “Edit” or “View Only” access. This distinction matters: “Edit” is for the users who will be “co-authoring”, and “View Only” is for those who will be testing and doing quality assurance.

Once a group has been established with distinction between the content developers and the testers, the below diagram can be followed to achieve a content management workflow.

This diagram is also provided in pdf form below:

Power BI Content Workflow

In this workflow, OneDrive for Business (ODFB) is used as a collaboration space for PBIX files. Alternatively, this could also be a source control environment or a local file share. A benefit in O365 deployments of Power BI is that when a group is created, an ODFB directory is also established for sharing.

The group workspace is used for collaboration between content authors and testers. Once development and testing iterations are complete, a Content Pack should be produced to distribute the production version of the dashboard, reports, and datasets to the rest of the organization. Content Packs are created by going to “Get Data” -> “My Organization” -> “Create Content Pack”.

IMPORTANT: In an enterprise scenario, it is critical that personal workspaces are not used to publish content packs. Doing so makes a single author the only person with full control over the content, and situations will consistently come up where that author is not available when updates are needed. Enterprise deployment scenarios can’t be achieved when content is tied to a personal workspace. Establish a group workspace structure that makes sense for your organization, as pointed out in the Pre-Requisite text on the workflow diagram.

Making Revisions

As revisions are made to the content in the group workspace, a notification appears in the upper right as a reminder that a content pack is associated with the dashboard or report being modified and that the content pack must be updated to publish the changes.

I have talked with some organizations that originally considered bypassing content packs and distributing content directly from group workspaces to “View Only” users. That approach leaves no way to iterate on changes before they are published to end users.

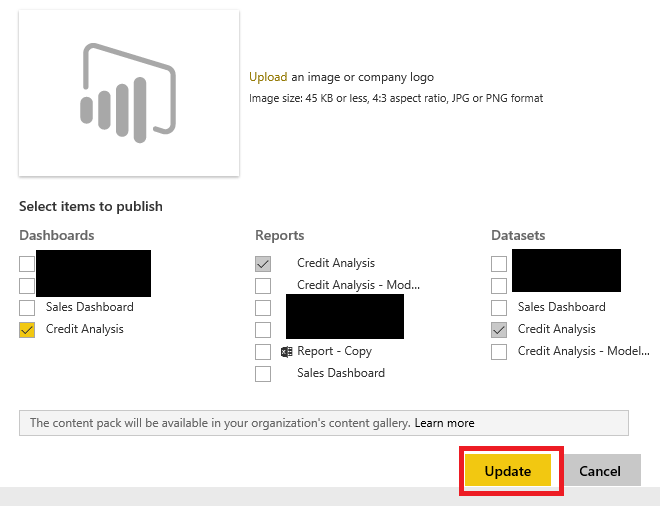

Once the iterations have been completed and the next revision is ready to publish, anyone with “Edit” privileges in the group can go to Settings (the gear icon in the upper right) and select “View Content Packs”.

A warning icon is displayed next to the content pack name, indicating there are pending changes. Once you click “Edit”, you will have the option to “Update” the content pack, at which point the changes will be distributed to those who have access to it.

Connection Strings and Separation of Duties (SoD)

The above workflow still leaves a couple of desired features without answers.

With the group workspaces, we have separated the ability to develop and test report changes from publishing them to the rest of the organization, but how do we transition from a DEV/TEST data source to a PROD data source? This has to be achieved within the Power BI Desktop file (as of the time this blog post was written). If it is a new calculation or set of tables that are being tested, collaboration will have to occur between the development team and the testers as to when to change the connection strings from DEV/TEST to PROD sources and republish the PBIX file.

In my experience, often on the reporting and analytics side of things, production connectivity is required by the “power users” in each department that are doing the quality assurance as they can only tell if new report features will pass the “sniff test” when they are connected to data that they know. DEV/TEST environments often grow stale and don’t hold as much value on the reporting/analytics side as they do for application development. Connection string management will be enhanced in Power BI as the product matures, but even then, limited value will be achieved without the production data to verify changes.

Lastly, a group workspace has only “Edit” and “View” roles and lacks a “Publish” role, so it may be a concern that a content author can push changes to content packs without separation of duties (which may require someone else to actually publish the change). If this is critical to your business, separation of duties can be achieved by adding another group workspace that only publishers can access, but I would suggest this is often overkill. Because reporting cannot “alter” any underlying data, it often does not have controls as strict as those a transactional application may have to protect against malicious code being deployed. And remember, a significant portion of any enterprise organization is still running its business from Excel spreadsheets produced and managed by the “power users” in each department. They simply extract data from source systems and create Excel reports that often go all the way to the CFO or CEO. So as we come back to the initial comment in this blog regarding the pendulum of self-service vs governance, remember that these concerns probably already exist in your organization today, with much less visibility than they would have under the workflow proposed above.

Feel free to leave any comments on this article or suggestions for future articles that touch on the use of Microsoft BI solutions such as Power BI for enterprise deployment.

Awesome first content. I’ll be following this blog pretty closely. Congrats Mr. Angry Analytics Blogger!

Nice Work, Steve! It is always empowering to be able to answer a client’s questions…”what is the expected value Microsoft solutions can deliver them and why should they care?” Thanks for giving us some ammo for Power BI Pro.

Looking forward to future posts.

Great post and good topic! Power BI is maturing at a fast pace, and it's great to hear how it should be managed in an Enterprise setting. I look forward to future posts. Great job!

Great post Steve. Kudos on the new blog.

Useful and practical post that opens our minds to how Power BI content could be used in an organization. Looking forward to the next post.

Nice way to start the blog! Look forward to the next one!

Awesome post Steve. I will be a frequent visitor at your blog. Thanks.

“Hello there! Do you use Twitter? I’d like to follow you if that would be ok. I’m definitely enjoying your blog and look forward to new posts.”

yes, @angryanalytics

Great post! I wish I had seen it before I spent two weeks figuring out a similar, rougher work process for my team.

Do you find that access to Content Packs is too difficult for data consumers? Sharing from a report that is saved to a group workspace gives the data consumer a link they can follow and immediately dive into the report. It’s my impression that if we create content packs, there is an additional step people will need to take after logging into Power BI, and that may reduce adoption.

true, i do find that some customers avoid content packs specifically for this reason. However, the downside is that every time you modify your dashboard/report your users are seeing those updates. You do not get the separation between development and production like you do with content packs. i do think this area of the product is evolving and good things are to come in 2017.

Fantastic post. Thanks for sharing this information!

And what about row level security and content packs?

Could this workflow be used in this scenario when everybody needs to see different data?

yes, you would just need to add the row level security piece in the report, and once it is published via a content pack, those users will adhere to the roles defined for their AD accounts

You really make it seem really easy together with your presentation however I find this matter to be really one thing which I think I might by no means understand. It seems too complicated and extremely wide for me. I’m having a look forward to your next submit, I will attempt to get the grasp of it!

please see my updated entry on this topic: https://angryanalytics.com/index.php/2017/05/11/power-bi-content-workflow-with-apps/

I am trying to determine the best practice for dev and prod environment. Using your method, this would only need a single data gateway for dev and prod?

sorry for the late reply. Yes, you would only need one gateway and could serve both. Sometimes your corporate firewall rules will not allow for you to connect to both dev and prod environments from the same VM so that could require you to split to multiple gateways. Also, load considerations may be a concern to serve both from a single machine…

Nice post, very useful

Hi showard! I wonder if you can help me. I want to customize the Microsoft Actuals vs Budget report for my organization by adding another data source (data entity). My problem is that we can’t combine DirectQuery and Import: the standard report uses DirectQuery, but to make my changes I need to add an OData feed, which is Import mode. If I switch to Import mode, how can I deploy my PBIX to the prod environment? Any help would be appreciated.

currently direct query cannot be combined with other queries, so if you select a DQ source you can no longer add any other sources to that file. I always suggest using Import mode for this reason (as long as you can make decisions from data that may be a few hours old).

But my question is: with Import mode, is it possible to do the deployment step through LCS? There are issues with the data gateway. How can I do it in this case?

Thanks for a great and relevant article!!

Did not see a publication date?

I am writing as of 1/8/2018. What is the current status of “managing” connection strings?

And any changes in deploying from Dev to Prod?

Hi, connection string changes have become much easier since my original post. Simply go to “Edit Queries” in PBI Desktop, select the “Data Source Settings” from the drop down and then simply click the “Change Source” button in the dialog. There is also a REST API that you can call as part of your DevOps process to do this. https://msdn.microsoft.com/en-us/library/mt814715.aspx
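
For anyone who wants to script the REST approach, here is a minimal Python sketch of what that call might look like. It assumes the "Update Datasources In Group" endpoint (`Default.UpdateDatasources`) and its `updateDetails` request body as I recall them from the Power BI REST API reference, so please verify both against the current documentation before relying on it. The server names, database name, IDs, and token are hypothetical placeholders, and the function only builds the request; it does not send it.

```python
import json
from urllib.request import Request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"

# Hypothetical environments; replace with your own servers and databases.
ENVIRONMENTS = {
    "test": {"server": "sql-test.example.com", "database": "SalesDW"},
    "prod": {"server": "sql-prod.example.com", "database": "SalesDW"},
}


def build_update_request(group_id, dataset_id, source_env, target_env, token):
    """Build (but do not send) a request that repoints a SQL data source.

    The URL and body shape are assumptions based on the Update Datasources
    In Group call; check them against the current REST API reference.
    """
    body = {
        "updateDetails": [
            {
                # Identifies the data source the dataset uses today.
                "datasourceSelector": {
                    "datasourceType": "Sql",
                    "connectionDetails": ENVIRONMENTS[source_env],
                },
                # The connection the dataset should use afterwards.
                "connectionDetails": ENVIRONMENTS[target_env],
            }
        ]
    }
    url = (
        f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}"
        "/Default.UpdateDatasources"
    )
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_update_request("GROUP_ID", "DATASET_ID", "test", "prod", "TOKEN")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(req)
```

Keeping the DEV/TEST and PROD connection details in one mapping makes the switch a deliberate, reviewable step in a release script rather than a manual edit inside the PBIX file.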
